Implementing ECF in Node.js

Overview

Purpose of the Document: This tutorial guides you through creating a Node.js-based web server that adheres to the ECF specification. By following these steps, you'll establish a connection between EIC and your target application, enabling data exchange and task execution.

Target Audience: This tutorial is intended for developers familiar with JavaScript and the basics of working with APIs. We'll assume you have a Google Cloud project set up and a Google Sheet containing role entitlement data.

Pre-requisite(s)

- Node.js: Download and install Node.js from the official website: nodejs.org

- Open API Specification: Download the ECF Open API specification file from GitHub: Open API Spec.

- IDE: Choose your preferred IDE (Integrated Development Environment) for Node.js development, such as Visual Studio.

Steps to generate the Node.js server bundle

- Open Swagger Editor: Launch the Swagger Editor in your web browser by visiting: swagger.io

- Import Open API Spec: In the editor window, click File > Import File and select the downloaded OpenAPISpec.yaml file.

- Render API Specification: The API specification should be displayed on the right panel.

- Generate Server Code: Go to Generate Server > nodejs-server.

- Download Server Bundle: This action will generate a ZIP file containing the Node.js server code.

Steps to start the Node.js server

- Unzip Server Bundle: Extract the downloaded ZIP file to create your Node.js project directory.

- Navigate to Project Directory: Open your terminal or command prompt and navigate to the unzipped project directory.

- Run Server: Execute the command npm start to start the server.

- Server Confirmation: Upon successful execution, you should see a message similar to:

Your server is listening on port 8080 (http://localhost:8080)

Swagger-ui is available on http://localhost:8080/docs

This indicates that your Node.js server is running and accessible.

Steps to explore the API Specification

- Open Web Browser: Launch your web browser and navigate to http://localhost:8080/docs.

- API Spec Listing: This page will display a list of all API specifications supported by the ECF.

- Explore Individual APIs: Click on a specific API to explore its details, including request types, request parameters, body structure, and response formats. Utilize the "Try it out" option to test the API with sample data (note: you may need to provide a valid authorization token for some APIs).

Deploying the Server

This section guides you through launching your Node.js server locally and customizing its configuration. It also briefly touches upon considerations for production deployment.

Starting the Server Locally

- Open Terminal: Launch your terminal or command prompt application.

- Navigate to Project Directory: Use the cd command to change directories and navigate to the root directory of your unzipped project.

- Run Server: Execute the command npm start to initiate the server.

- Server Confirmation: Upon successful execution, you'll see a message similar to:

Your server is listening on port 8080 (http://localhost:8080)

Swagger-ui is available on http://localhost:8080/docs

This indicates that your Node.js server is running locally on port 8080 and that Swagger UI is accessible at http://localhost:8080/docs. You can open this URL in your web browser to explore the available APIs.

Customizing Server Configuration (Optional):

By default, the server runs on port 8080 using the HTTP protocol. Here's how to modify these settings:

- Locate package.json: Open the package.json file within your project directory using a text editor.

- Edit Configuration: Search for the scripts section within the file. You'll find properties like start that define commands used to start the server. Look for properties controlling port and protocol configurations (these might vary depending on the generated server code).

- Update Values: Modify the values for port and protocol as needed. Common properties might include "start": "node index.js -p <port number>", where <port number> is the desired port. Refer to the specific documentation for your generated code if the properties have different names.

- Save Changes: Save your modifications to the package.json file.

- Restart Server: After making changes, stop the server using Ctrl+C in your terminal and then restart it using npm start again. The server will now run with your updated configuration.
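Whether a -p flag has any effect depends on how the generated index.js reads its port. The sketch below shows one way index.js could resolve the port, assuming a hypothetical resolvePort helper and a PORT environment variable, neither of which is part of the generated code:

```javascript
// Hypothetical helper: resolve the listening port from a "-p <port>" CLI
// argument, then the PORT environment variable, then the default 8080.
function resolvePort(argv, env, fallback = 8080) {
  const flagIndex = argv.indexOf('-p');
  const candidate = flagIndex !== -1 ? argv[flagIndex + 1] : env.PORT;
  const port = Number.parseInt(candidate, 10);
  // Accept only valid TCP port numbers; otherwise use the fallback.
  return Number.isInteger(port) && port > 0 && port < 65536 ? port : fallback;
}

const serverPort = resolvePort(process.argv.slice(2), process.env);
console.log('Resolved server port: %d', serverPort);
```

With a helper like this, the value passed to listen() in index.js would come from resolvePort instead of a hard-coded number.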

Using HTTPS (Optional)

In production environments, it's crucial to secure communication between your server and clients. The HTTPS protocol encrypts data transmission, protecting sensitive information and ensuring data integrity. This section provides a high-level overview of enabling HTTPS with a self-signed certificate (for development purposes only).

Important Note: Using a self-signed certificate is not recommended for production due to security concerns. Browsers will display warnings to users when they encounter self-signed certificates. For production environments, you should obtain a valid certificate issued by a trusted Certificate Authority (CA).

Enabling HTTPS with Self-Signed Certificate (Development Only):

- Generate a Self-Signed Certificate:

  - Install OpenSSL if it's not already available on your system. You can find installation instructions online for your specific operating system.

  - Run the following command in your terminal to generate a self-signed certificate and key:

openssl req -nodes -new -x509 -keyout server.key -out server.cert

  - Follow the prompts to provide information like your organization name and location. This information is embedded within the certificate.

- Place the Certificate and Key Files:

  - Move the generated server.key and server.cert files to your project's base directory.

- Configure index.js for HTTPS:

  - Locate the index.js file in your project.

  - Update the code to use the generated certificate and key for HTTPS. Here's an example:

const fs = require('fs');

const path = require('path');

const https = require('https');

const serverPort = 443; // you can specify a different port number

// Add the certificate details to the options. `routing` is used by the

// generated code when it builds `app`; `key` and `cert` are used by HTTPS.

const options = {

routing: {

controllers: path.join(__dirname, './controllers')

},

key: fs.readFileSync('server.key'),

cert: fs.readFileSync('server.cert'),

};

// Pass the options while creating the server.

// `app` is the Express app that the generated index.js already creates.

https.createServer(options, app).listen(serverPort, function () {

console.log('Your server is listening on port %d (https://localhost:%d)', serverPort, serverPort);

console.log('Swagger-ui is available on https://localhost:%d/docs', serverPort);

});

Explanation: The code snippet creates a secure HTTPS server using Node.js. It configures the server with:

- Port: Listens for incoming requests on the specified port (default 443).

- Routing: Points to the directory containing your application's controllers for handling requests.

- Security: Uses a server key and certificate for secure HTTPS connections. It then starts the server and logs messages about its availability and the location of documentation.

- Start the Server:

  - Run npm start in your terminal to launch the server with HTTPS enabled.

- Access the Server:

  - You can now access your server securely using HTTPS. Open https://localhost:<port number>/docs (replace <port number> with the port you specified) in your web browser. However, due to the self-signed certificate, your browser will likely display a warning message. This is expected for development purposes.

Remember: This approach is for development and testing only. In production, you should obtain a valid certificate from a trusted CA to ensure secure communication and avoid browser warnings.
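During local development it can be convenient to fall back to plain HTTP when no certificate has been generated yet. The following is a minimal sketch of that idea, assuming a hypothetical createServerFor helper and a stand-in request handler in place of the generated Express app:

```javascript
const fs = require('fs');
const http = require('http');
const https = require('https');

// Hypothetical helper: build an HTTPS server when both files exist,
// otherwise fall back to plain HTTP (useful during local development).
function createServerFor(app, keyPath, certPath) {
  if (fs.existsSync(keyPath) && fs.existsSync(certPath)) {
    const tlsOptions = {
      key: fs.readFileSync(keyPath),
      cert: fs.readFileSync(certPath),
    };
    return { protocol: 'https', server: https.createServer(tlsOptions, app) };
  }
  return { protocol: 'http', server: http.createServer(app) };
}

// Stand-in request handler; in the generated project this would be the Express app.
const app = (req, res) => res.end('ok');
const { protocol } = createServerFor(app, 'server.key', 'server.cert');
console.log('Selected protocol:', protocol);
```

The returned server is not started here; calling listen() on it works the same way for both protocols.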

Customizing the Connector for Target Application Integration

Once you've generated the Node.js server project and imported it into your preferred IDE (like Visual Studio), you can customize it to integrate with your specific target application. This customization process involves establishing a connection, data exchange, and endpoint exposure as outlined in the ECF specification.

Key Objectives:

- Target Application Connection: Develop logic to establish a secure connection with your target application using appropriate authentication methods and protocols.

- Data Fetching and Provisioning: Implement functionality to retrieve data from the target application based on ECF API requests. Conversely, you might need to implement functionalities to provision data to the target application as dictated by ECF API calls.

- REST Endpoint update: Update the REST endpoints that adhere to the ECF specification. These endpoints will be invoked by EIC to interact with your custom connector and facilitate data exchange.

- Utilize Third-Party Libraries: Depending on your target application's API and communication protocols, you might leverage Node.js libraries like axios for making HTTP requests or libraries like socket.io for real-time communication.

- Data Transformation and Validation: Implement logic to handle potential data format differences between your connector and the target application. You might need to transform or validate data before sending it to or receiving it from the target application.

- Error Handling and Logging: Design a robust error handling mechanism to capture and log any issues encountered during communication with the target application or data processing. This will aid in troubleshooting and debugging.

Additional Notes: Refer to the ECF specification documentation for detailed information about supported API calls, request structures, response formats, and authentication mechanisms. Secure your connector implementation by following best practices for handling sensitive data and credentials.

Remember: This section provides a high-level overview of the customization process. The specific implementation details will vary depending on your target application and the functionality required by the ECF integration.
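As an illustration of the data transformation objective, the sketch below maps a record from a target application into an ECF-style account object. The input field names (id, login, fullName) and the toEcfAccount helper are hypothetical; the output field names are taken from the sample account data used later in this tutorial:

```javascript
// Hypothetical mapping from a target-application user record to an
// ECF-style account object. Input field names are assumptions.
function toEcfAccount(targetUser) {
  if (!targetUser || targetUser.id === undefined || targetUser.id === null) {
    throw new TypeError('Target user record must include an id');
  }
  return {
    accountID: String(targetUser.id),        // ECF IDs are sent as strings here
    name: targetUser.login || '',            // default missing values to ''
    displayname: targetUser.fullName || '',
  };
}

console.log(toEcfAccount({ id: 1006, login: 'MNeil', fullName: 'N' }));
```

Keeping the mapping in one small function makes it easier to adjust when the target application's schema changes.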

Implementing Bearer Token Authentication (Optional)

The default server implementation includes bearer token authentication for all API calls. Any request without an authorization header will result in a failed request. However, since this is a sample specification, there's no defined token value. You can provide any random value for authorization during development.

Important Note: In a real-world scenario, static token values pose a security risk. You should implement a proper authorization mechanism to secure your server.

Example: Modifying controllers/AccountImport.js

This example demonstrates how a developer might implement a basic (and insecure) authentication check by modifying the controllers/AccountImport.js file:

module.exports.postAccounts = function postAccounts (req, res, next, body, offset, pagesize) {

// sample custom code here to perform authorization

// Default to an empty string so that a missing header fails the check instead of crashing

const authHeader = req.headers['authorization'] || '';

const [scheme, token] = authHeader.split(' ');

// The scheme name is case-insensitive; the token itself must match exactly

if (!scheme || scheme.toLowerCase() !== 'bearer' || token !== '<token value>') {

throw new Error('Authorization token not correct');

}

// sample code completed

AccountImport.postAccounts(body, offset, pagesize)

.then(function (response) {

utils.writeJson(res, response);

})

.catch(function (response) {

utils.writeJson(res, response);

});

};

- The code retrieves the Authorization header from the incoming request.

- It checks that the header exists and uses the format "Bearer <token value>".

- It compares the extracted token with a pre-defined static value (the <token value> placeholder).

- If the header is missing or the token does not match, an error is thrown.

- If the token matches (insecure in production), the code proceeds with default functionality.

Remember: This is a simplified example for demonstration purposes only. In a production environment, you should implement a robust authorization mechanism. This might involve:

- Issuing tokens to authorized users or applications.

- Validating tokens against a centralized authentication server.

- Implementing token expiration and refresh mechanisms.

For production-grade security, explore established authentication frameworks and libraries for Node.js, such as Passport.js or JSON Web Tokens (JWT).
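If a shared token is still used during an interim phase, two modest hardening steps are to read it from the environment and to compare it in constant time. The sketch below shows this under stated assumptions: the helper names and the ECF_API_TOKEN variable are hypothetical, and this does not replace a proper token-issuing mechanism:

```javascript
const crypto = require('crypto');

// Extract the token from an "Authorization: Bearer <token>" header value.
function extractBearerToken(headerValue) {
  if (typeof headerValue !== 'string') return null;
  const match = /^Bearer\s+(\S+)$/i.exec(headerValue.trim());
  return match ? match[1] : null;
}

// Compare in constant time; hashing first gives both buffers equal length,
// which crypto.timingSafeEqual requires.
function tokensMatch(presented, expected) {
  const a = crypto.createHash('sha256').update(presented).digest();
  const b = crypto.createHash('sha256').update(expected).digest();
  return crypto.timingSafeEqual(a, b);
}

// Node.js lowercases incoming header names, hence 'authorization'.
function isAuthorized(headers, expectedToken) {
  const token = extractBearerToken(headers['authorization']);
  return token !== null && tokensMatch(token, expectedToken);
}

// ECF_API_TOKEN is a hypothetical environment variable name.
const expected = process.env.ECF_API_TOKEN || 'dev-only-token';
console.log(isAuthorized({ authorization: 'Bearer dev-only-token' }, expected));
```

A controller could call isAuthorized(req.headers, expected) before invoking the service function and respond with a 401 status when it returns false.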

Bearer Token Encoding and Decoding (Optional)

While the previous section discussed basic authentication using a static token value, real-world scenarios require more secure approaches. Bearer tokens are commonly used for authorization in REST APIs. These tokens are typically issued by an authorization server and contain encoded information about the user or application making the request.

Understanding Bearer Tokens:

- Bearer tokens are typically sent in the Authorization header of an HTTP request with the format Bearer <token value>.

- The token value itself might be a Base64-encoded string containing user information, claims, and an expiration timestamp.

- The server receiving the request decodes the token and validates its claims against an authorization server or a local token store.

Important Note: Base64 encoding does not provide encryption. It simply encodes binary data into a human-readable format. To ensure token security, you should:

- Use a library like jsonwebtoken (JWT) to create and validate tokens signed with a secret key. JWT tokens are self-contained and include expiration times, making them a secure choice for authorization.

- Store secret keys securely (e.g., environment variables) and never expose them in your code.

- Implement token refresh mechanisms to handle token expiration.

Example: Base64 Encoding/Decoding (for illustration only)

This example demonstrates basic Base64 encoding and decoding, which can be a preliminary step for working with tokens. However, it's crucial to understand that this approach is not secure for production use.

const stringToEncode = 'Hello@12345$';

// Encoding the string to Base64 (Buffer.from reads the string as UTF-8 by default)

const encodedString = Buffer.from(stringToEncode).toString('base64');

console.log('Base64 Encoded (UTF-8):', encodedString);

const decodedString = Buffer.from(encodedString, 'base64').toString('utf-8');

console.log('Base64 Decoded:', decodedString);

Remember: This section provides a high-level overview. For secure token management, explore established libraries like jsonwebtoken and follow best practices for token generation, validation, and storage. Refer to the documentation of these libraries for detailed usage instructions.
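To make the difference between encoding and signing concrete, the sketch below signs and verifies a compact HS256 token using only Node's built-in crypto module. It is a hand-rolled illustration, not the jsonwebtoken API; the function names and the TOKEN_SECRET variable are hypothetical, and a maintained library is the better choice in practice:

```javascript
const crypto = require('crypto');

const b64url = (input) => Buffer.from(input).toString('base64url');

// Sign a payload as a compact HS256 token: header.payload.signature
function signToken(payload, secret) {
  const header = b64url(JSON.stringify({ alg: 'HS256', typ: 'JWT' }));
  const body = b64url(JSON.stringify(payload));
  const signature = crypto.createHmac('sha256', secret)
    .update(`${header}.${body}`).digest('base64url');
  return `${header}.${body}.${signature}`;
}

// Return the payload if the signature is valid and the token has not
// expired; otherwise return null.
function verifyToken(token, secret, nowSeconds = Math.floor(Date.now() / 1000)) {
  const parts = String(token).split('.');
  if (parts.length !== 3) return null;
  const [header, body, signature] = parts;
  const expected = crypto.createHmac('sha256', secret)
    .update(`${header}.${body}`).digest();
  const presented = Buffer.from(signature, 'base64url');
  if (presented.length !== expected.length ||
      !crypto.timingSafeEqual(presented, expected)) return null;
  let payload;
  try {
    payload = JSON.parse(Buffer.from(body, 'base64url').toString('utf8'));
  } catch (err) {
    return null;
  }
  if (payload === null || typeof payload !== 'object') return null;
  if (typeof payload.exp === 'number' && payload.exp <= nowSeconds) return null;
  return payload;
}

// TOKEN_SECRET is a hypothetical environment variable name.
const secret = process.env.TOKEN_SECRET || 'dev-only-secret';
const token = signToken({ sub: 'eic-client', exp: Math.floor(Date.now() / 1000) + 300 }, secret);
console.log('Token accepted:', verifyToken(token, secret) !== null);
```

Unlike plain Base64, changing any character of the payload invalidates the signature, so the server can trust the claims without a lookup.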

Implementing Logging for Debugging and Monitoring

Adding a logging mechanism to your custom connector is essential for debugging, troubleshooting, and monitoring its behavior. Logs provide valuable insights into request/response data, errors, and application events.

Choosing a Logging Library: Node.js offers a variety of logging libraries. This example utilizes the popular winston library due to its flexibility and ease of use.

Steps to Add Logging:

- Install winston:

npm install winston

- Create a logger.js file:

  - Create a new file named logger.js within your project's utils folder (or a similar location for utility functions).

  - Add the following code to configure a basic Winston logger:

const winston = require('winston');

const logger = winston.createLogger({

level: 'info', // Set the minimum log level (e.g., 'info', 'warn', 'error')

format: winston.format.combine(

winston.format.timestamp(),

winston.format.printf(log => {

return `${log.timestamp} [${log.level}] - ${log.message}`;

})

),

transports: [

new winston.transports.Console(), // Log to console

new winston.transports.File({ filename: 'application.log' }) // Log to file

]

});

module.exports = logger;

This code defines a logger that outputs messages to the console and a file named application.log in the project's base directory. You can customize the log level (e.g., debug, info, warn, error) to control the detail of logged messages.

- Utilize the Logger in Your Controllers:

  - Import the logger instance into your controller files (e.g., controllers/AccountProvisioning.js).

  - Use the logger to record messages at appropriate points in your code:

const logger = require('../utils/logger');

module.exports.postCreateAccount = function postCreateAccount (req, res, next, body) {

// The printf format above prints only log.message, so serialize the body into the message

logger.info(`Calling create accounts API with request body: ${JSON.stringify(body)}`);

// ... your existing code here ...

};

In this example, the code logs an informational message before processing the apiV1CreateAccountPOST request. You can add additional log statements throughout your code to track data flow, errors, and other events.

Benefits of Logging:

- Debugging: Logs help identify issues during development and troubleshooting.

- Monitoring: Analyzing log files provides insights into application behavior and potential problems.

- Auditing: Logs can be used to track user activity and API usage.

Remember: This is a basic example. You can explore advanced Winston features like custom log levels, transport options, and formatting to tailor your logging needs.
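Request bodies can contain credentials or other sensitive values, so it may be worth masking them before they reach the log. The sketch below is one possible approach using plain JavaScript; the redact helper and the list of sensitive key names are assumptions to adapt to your payloads:

```javascript
// Hypothetical list of field names to mask; adjust to your payloads.
const SENSITIVE_KEYS = new Set(['password', 'token', 'authorization', 'secret']);

// Return a deep copy of `value` with sensitive fields replaced by a marker.
// The original object is left unchanged.
function redact(value) {
  if (Array.isArray(value)) return value.map(redact);
  if (value !== null && typeof value === 'object') {
    return Object.fromEntries(
      Object.entries(value).map(([key, inner]) =>
        SENSITIVE_KEYS.has(key.toLowerCase())
          ? [key, '[REDACTED]']
          : [key, redact(inner)]
      )
    );
  }
  return value;
}

const body = { firstName: 'Ada', password: 'hunter2', nested: { Token: 'abc' } };
console.log(JSON.stringify(redact(body)));
// prints {"firstName":"Ada","password":"[REDACTED]","nested":{"Token":"[REDACTED]"}}
```

The redacted copy can then be serialized into the log message in place of the raw body.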

Implementing Business Logic and Validations

Your custom connector will likely require various business logic and data validations to ensure data integrity and handle edge cases. This section provides a general overview and an example.

Understanding Business Logic:

- Business logic refers to the application-specific rules that govern how your connector processes and manipulates data.

- It might involve data transformations, validations, error handling, and interaction with external systems.

- Validations ensure that incoming data adheres to expected formats and constraints.

- This helps prevent errors during data processing and integration with target applications.

Example: Validating firstName in Account Provisioning:

This example demonstrates how to validate the presence of a firstName parameter in the request body during account provisioning:

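const { ApiError } = require('./errors'); // Assumes an ApiError class is defined in ./errors

exports.postCreateAccount = function(body) {

return new Promise(function(resolve, reject) {

// Guard against a missing body as well as a missing property

if (!body || !Object.prototype.hasOwnProperty.call(body, 'firstName')) {

const errorResponse = new ApiError(400, "Missing 'firstName' parameter in request body");

// Return so that the rest of the function does not run after rejecting

return reject(errorResponse);

}

// Rest of your code continues here...

});

};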

- The code checks if the request body object has a property named firstName.

- If firstName is missing, an ApiError object is created with appropriate error code and message.

- The promise is rejected with the error object, signaling invalid data.

Remember: This is a basic example. You'll need to implement additional validations and business logic specific to your target application and ECF requirements.
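When several fields are required, checking them in one pass lets the connector report every missing field in a single response. A minimal sketch, assuming a hypothetical findMissingFields helper and an illustrative list of required fields:

```javascript
// Return the names of required fields that are missing or blank in `body`.
function findMissingFields(body, requiredFields) {
  const source = body !== null && typeof body === 'object' ? body : {};
  return requiredFields.filter((field) => {
    const value = source[field];
    return value === undefined || value === null || String(value).trim() === '';
  });
}

// Hypothetical required fields for a create-account request.
const missing = findMissingFields(
  { firstName: 'Ada', lastName: ' ' },
  ['firstName', 'lastName', 'email']
);
console.log(missing); // prints [ 'lastName', 'email' ]
```

If the returned array is not empty, the service function can reject with a 400 error that lists the missing names.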

Additional Considerations:

- Error Handling: Develop a robust error handling mechanism to capture and handle errors gracefully. Define custom error classes or utilize existing libraries for error handling.

- Data Transformation: Your connector might need to transform data between the ECF format and the format expected by your target application.

- Pagination: Consider implementing support for pagination (using pageSize and offset parameters) for large datasets in import operations. Refer to the provided code samples in the appendix for guidance.

By incorporating business logic and validations, you ensure your custom connector operates reliably and handles data effectively within the ECF ecosystem.

Sample Code for Google Sheets Integration (Informational)

This section provides a high-level overview and code samples demonstrating how a custom connector might interact with Google Sheets. It's important to note that this is for informational purposes only. You'll need to adapt and secure the code based on your specific use case and Google Sheets API authentication requirements.

Prerequisites

- A Google Cloud project with the Google Sheets API enabled.

- Service account credentials created for your project and downloaded as a JSON file.

Steps:

- Set up Google Sheets API:

  - Enable the Google Sheets API for your project in the Google Cloud Console.

  - Create service account credentials and download the JSON key file.

- Install Required Packages (fs and readline are built into Node.js and do not need to be installed):

npm install googleapis

- Implement Google Sheets Interaction: The provided code snippets demonstrate how to:

  - Read data from specific ranges in a Google Sheet.

  - Parse and format the data according to ECF response requirements (including offset and pagination for large datasets).

- Do not store your service account credentials directly in your code. Consider environment variables or a secure credential store.

- For a walkthrough of authenticating to the Google Sheets API with Google Cloud service account credentials, see: https://nishothan-17.medium.com/google-sheet-apis-using-google-cloud-platform-gcp-credentials-8bca55521af

Accounts Import

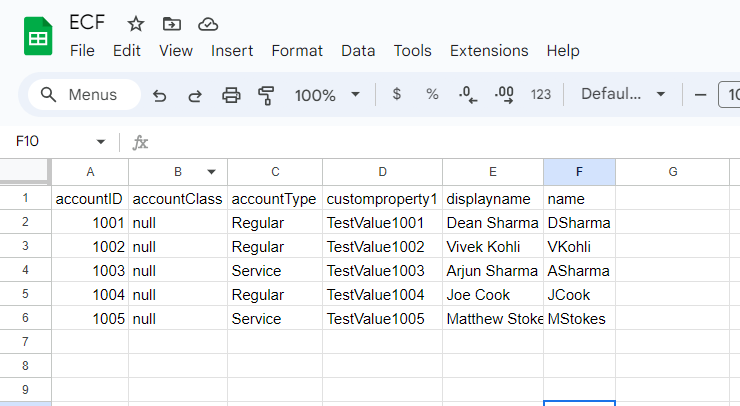

The figure above lists the accounts present in the Google Sheet.

The readData function below fetches a page of account data from Google Sheets and transforms it into the ECF response format:

const { google } = require('googleapis');

const fs = require('fs');

const readline = require('readline');

// Load credentials from JSON file

const credentials = require('./utils/external-connector-framework-09842e2d8788.json');

// Create a new JWT client using the credentials

const auth = new google.auth.JWT(

credentials.client_email,

null,

credentials.private_key,

['https://www.googleapis.com/auth/spreadsheets']

);

// Create a new instance of Google Sheets API

const sheets = google.sheets({ version: 'v4', auth });

// ID of the Google Sheet

const spreadsheetId = '1tZyExhNelfLaI68oBI3DwWly2p1xfY7VJ1k_CaOzoP0';

// Function to read data from the Google Sheet with offset and pageSize

async function readData(offset, pageSize) {

try {

const headerRange = 'Accounts!1:1'; // Range for header row

const headerResponse = await sheets.spreadsheets.values.get({

spreadsheetId,

range: headerRange,

});

const headers = headerResponse.data.values[0];

// Calculate range based on offset and pageSize

const dataStartRow = offset + 2; // Offset starts from data row 1 (after header)

const range = `Accounts!A${dataStartRow}:F${dataStartRow + pageSize - 1}`;

const response = await sheets.spreadsheets.values.get({

spreadsheetId,

range,

});

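// The API omits `values` when the range is empty, so default to an empty array

const rows = response.data.values || [];

if (rows.length) {

// Number of rows on this page, not the total number of rows in the sheet

const totalCount = rows.length;

const accounts = rows.map(row => {

const account = {};

headers.forEach((header, index) => {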

account[header] = row[index] || ''; // Ensure each header has a corresponding value

});

return account;

});

console.log(JSON.stringify({

offset: offset,

totalCount: totalCount,

accounts: accounts

}, null, 2));

} else {

console.log(JSON.stringify({

offset: offset,

totalCount: 0,

accounts: []

}, null, 2));

}

} catch (err) {

console.error('The API returned an error:', err);

}

}

// Call the function with offset and pageSize

readData(0, 2);

- The function reads the header row first, then calculates the data range from offset and pageSize, so each call fetches a single page of rows.

- Error handling is included to catch potential issues during API calls and data retrieval.

- The function logs an object containing offset, totalCount, and accounts in the ECF response format. In a connector, return this object to the caller instead of logging it. Note that totalCount here is the number of rows on the current page.

- Load the service account credentials securely, as mentioned in the previous section.

- Consider validating the spreadsheetId to prevent unauthorized access to unintended sheets.

- Validate user-provided input (e.g., offset and pageSize) before using it to build the range string.

- You might want to add logic to handle sheets that contain more rows than the requested pageSize. This could involve making additional API calls with adjusted offsets.

- Explore advanced techniques like batching requests to improve performance when dealing with large datasets.
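The range calculation and the input checks can be isolated in a small pure function, which also makes them easy to test without calling the Sheets API. A minimal sketch, assuming a hypothetical buildPageRange helper that uses the same row arithmetic as readData:

```javascript
// Build an A1-notation range for one page of data rows (row 1 is the header).
function buildPageRange(sheetName, offset, pageSize, lastColumn = 'F') {
  if (!Number.isInteger(offset) || offset < 0) {
    throw new RangeError('offset must be a non-negative integer');
  }
  if (!Number.isInteger(pageSize) || pageSize < 1) {
    throw new RangeError('pageSize must be a positive integer');
  }
  const startRow = offset + 2;             // skip the header row
  const endRow = startRow + pageSize - 1;  // inclusive last row of the page
  return `${sheetName}!A${startRow}:${lastColumn}${endRow}`;
}

console.log(buildPageRange('Accounts', 0, 2)); // prints Accounts!A2:F3
```

Rejecting non-integer values up front prevents malformed ranges from being sent to the API when offset or pageSize arrive as query-string text.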

Role Entitlement Import:

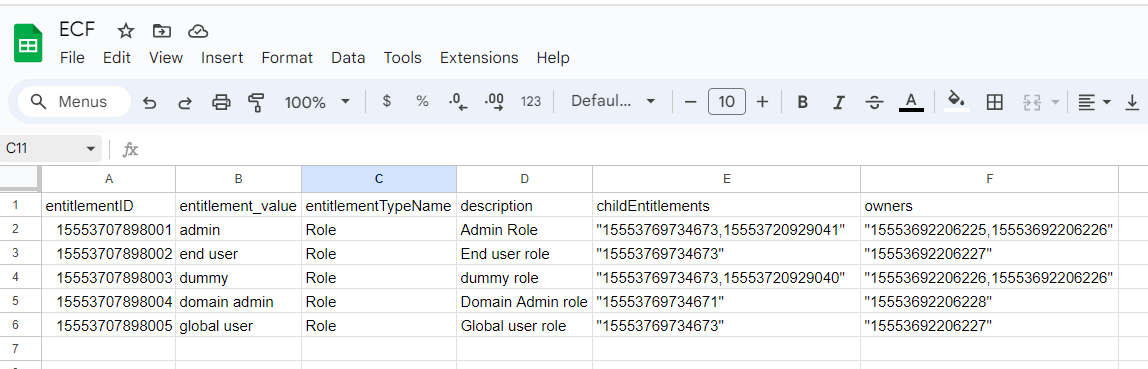

The image above shows a sample role entitlement table in a Google Sheet. Each row represents a role, and the columns contain details such as the role name, description, child entitlements (which are other roles), and owners (users or groups with access).

The code below reads the data in the sheet and transforms it into a response as per the API spec defined by ECF.

const { google } = require('googleapis');

const fs = require('fs');

const readline = require('readline');

// Load credentials from JSON file

const credentials = require('./utils/external-connector-framework-09842e2d8788.json');

// Create a new JWT client using the credentials

const auth = new google.auth.JWT(

credentials.client_email,

null,

credentials.private_key,

['https://www.googleapis.com/auth/spreadsheets']

);

// Create a new instance of Google Sheets API

const sheets = google.sheets({ version: 'v4', auth });

// ID of the Google Sheet

const spreadsheetId = '1tZyExhNelfLaI68oBI3DwWly2p1xfY7VJ1k_CaOzoP0';

// Function to read data from the Google Sheet with offset and pageSize

async function readData(offset, pageSize) {

try {

const headerRange = 'Entitlements_Roles!1:1'; // Range for header row

const headerResponse = await sheets.spreadsheets.values.get({

spreadsheetId,

range: headerRange,

});

const headers = headerResponse.data.values[0];

// Calculate range based on offset and pageSize

const dataStartRow = offset + 2; // Offset starts from data row 1 (after header)

const range = `Entitlements_Roles!A${dataStartRow}:F${dataStartRow + pageSize - 1}`;

const response = await sheets.spreadsheets.values.get({

spreadsheetId,

range,

});

// The API omits `values` when the range is empty, so default to an empty array

const rows = response.data.values || [];

if (rows.length) {

const totalCount = rows.length;

const roles = rows.map(row => {

const role = {};

headers.forEach((header, index) => {

// Treat a missing cell as empty text so that split() cannot fail

const cell = row[index] || '';

if (header === 'childEntitlements') {

// Parse multi-valued data into an array of objects, skipping empty entries

const childEntitlements = cell.split(',').map(value => value.trim()).filter(Boolean).map(value => {

return { entitlementID: value };

});

role[header] = { roles: childEntitlements };

} else if (header === 'owners') {

// Parse multi-valued data into an array of strings, skipping empty entries

role[header] = cell.split(',').map(value => value.trim()).filter(Boolean);

} else {

role[header] = cell;

}

});

return role;

});

console.log(JSON.stringify({

offset: offset,

totalCount: totalCount,

roles: roles

}, null, 2));

} else {

console.log(JSON.stringify({

offset: offset,

totalCount: 0,

roles: []

}, null, 2));

}

} catch (err) {

console.error('The API returned an error:', err);

}

}

// Call the function with offset and pageSize

readData(0, 2);

This code reads role entitlement data from a Google Sheet and converts it into a JSON format suitable for ECF (External Connector Framework).

Here's a breakdown of the steps:

- Authentication: It authenticates with the Google Sheets API using a service account.

- Data Retrieval: It retrieves data from a specified Google Sheet and defines the starting row and number of rows to read.

- Header Processing: It extracts the header row names from the sheet.

- Data Processing: It iterates through each data row and creates a role object.

- Multi-valued Data Handling: It handles comma-separated values in specific columns (child entitlements and owners) by converting them into arrays.

- Output: It converts the processed data (including offset, total count, and roles) into JSON format and logs it to the console.

- Error Handling: It includes basic error handling to log any issues during the process.
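The multi-valued handling described above can be sketched as a standalone function that converts one sheet row into a role object. The splitCell and rowToRole names are hypothetical; the column names childEntitlements and owners are the ones used in the sample sheet:

```javascript
// Split a comma-separated cell into trimmed, non-empty values.
function splitCell(cell) {
  return String(cell ?? '')
    .split(',')
    .map((value) => value.trim())
    .filter((value) => value !== '');
}

// Convert one row into a role object keyed by the header names.
function rowToRole(headers, row) {
  const role = {};
  headers.forEach((header, index) => {
    const cell = row[index]; // undefined when the sheet omits trailing cells
    if (header === 'childEntitlements') {
      role[header] = { roles: splitCell(cell).map((id) => ({ entitlementID: id })) };
    } else if (header === 'owners') {
      role[header] = splitCell(cell);
    } else {
      role[header] = cell ?? '';
    }
  });
  return role;
}

const sampleHeaders = ['name', 'childEntitlements', 'owners'];
console.log(JSON.stringify(rowToRole(sampleHeaders, ['Admin', 'r1, r2'])));
```

Because the function does not touch the Sheets API, it can be unit tested with plain arrays, including rows where trailing cells are missing.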

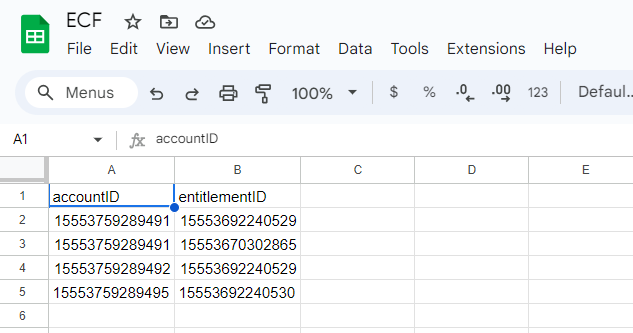

Role-Accounts membership import:

The image above shows sample role-to-account membership data stored in a Google Sheet.

The code below reads the data in the sheet and transforms it into a response as per the API spec defined by ECF.

const { google } = require('googleapis');

const fs = require('fs');

const readline = require('readline');

// Load credentials from JSON file

const credentials = require('./utils/external-connector-framework-09842e2d8788.json');

// Create a new JWT client using the credentials

const auth = new google.auth.JWT(

credentials.client_email,

null,

credentials.private_key,

['https://www.googleapis.com/auth/spreadsheets']

);

// Create a new instance of Google Sheets API

const sheets = google.sheets({ version: 'v4', auth });

// ID of the Google Sheet

const spreadsheetId = '1tZyExhNelfLaI68oBI3DwWly2p1xfY7VJ1k_CaOzoP0';

// Function to read all membership rows from the Google Sheet (no pagination)

async function readData() {

try {

const headerRange = 'Roles_Memberships!1:1'; // Range for header row

const headerResponse = await sheets.spreadsheets.values.get({

spreadsheetId,

range: headerRange,

});

const headers = headerResponse.data.values[0];

// Read every data row, starting from row 2 (row 1 is the header)

const dataStartRow = 2;

const range = `Roles_Memberships!A${dataStartRow}:F`;

const response = await sheets.spreadsheets.values.get({

spreadsheetId,

range,

});

// The API omits `values` when the range is empty, so default to an empty array

const rows = response.data.values || [];

if (rows.length) {

const count = rows.length;

const role_memberships = rows.map(row => {

const membership = {};

headers.forEach((header, index) => {

membership[header] = Number(row[index]);

});

return membership;

});

console.log(JSON.stringify({

count: count,

role_memberships: role_memberships

}, null, 2));

} else {

console.log(JSON.stringify({

count: 0,

role_memberships: []

}, null, 2));

}

} catch (err) {

console.error('The API returned an error:', err);

}

}

// Call the function (it takes no arguments)

readData();

Explanation: This code reads role-account membership data from a Google Sheet and converts it into a JSON format suitable for ECF (External Connector Framework).

Here's a breakdown of the key steps:

- Authentication: It authenticates with the Google Sheets API using a service account (credentials loaded from a JSON file).

- Data Retrieval:

  - It retrieves the header row to get column names.

  - It defines the data range starting from the second row (after the header).

  - It fetches the data from the specified range in the Google Sheet.

- Data Processing:

  - It checks if any data exists.

  - If data exists, it iterates through each row and creates a membership object.

  - It iterates through each header and assigns the corresponding cell value (converted to a number) as a property in the membership object.

- Output and Error Handling:

  - It converts the processed data (including total count and an array of membership objects) into JSON format and logs it to the console.

  - It includes basic error handling to log any issues during the process.

This code assumes all data in the sheet represents numeric IDs. You might need to modify the conversion step (Number(row[index])) if your data has different types.
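Note that Number() turns non-numeric text into NaN, which JSON.stringify then writes as null. If the sheet mixes numeric and text IDs, a more defensive conversion might look like the sketch below, where parseCell is a hypothetical helper:

```javascript
// Convert a cell to a number only when it is a plain numeric string.
// Values with leading zeros (such as '007') stay as strings so that
// identifiers are not altered.
function parseCell(cell) {
  if (typeof cell !== 'string') return cell ?? null;
  const trimmed = cell.trim();
  return /^-?(0|[1-9]\d*)(\.\d+)?$/.test(trimmed) ? Number(trimmed) : trimmed;
}

console.log([parseCell('1006'), parseCell('role-7'), parseCell('007')]);
// prints [ 1006, 'role-7', '007' ]
```

In the membership mapping, parseCell(row[index]) would take the place of Number(row[index]).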

Account Provisioning:

This section provides an example of account provisioning. It uses sample hardcoded account data. In practice, your custom connector receives this data from EIC when a create account task is provisioned. You should read the account data from the request body and use it to create the account in the target application.

const { google } = require('googleapis');
const fs = require('fs');

// Load service account credentials from the JSON key file
const credentials = require('./utils/external-connector-framework-09842e2d8788.json');

// Create a new JWT client using the credentials
const auth = new google.auth.JWT(
  credentials.client_email,
  null,
  credentials.private_key,
  ['https://www.googleapis.com/auth/spreadsheets']
);

// Create a new instance of Google Sheets API
const sheets = google.sheets({ version: 'v4', auth });

// ID of the Google Sheet
const spreadsheetId = '1tZyExhNelfLaI68oBI3DwWly2p1xfY7VJ1k_CaOzoP0';

// Function to append one row of account data to the Accounts sheet
async function writeDataToSheet(data) {
  try {
    // One row; the cells follow the property order of the data object
    const values = [Object.values(data)];
    const response = await sheets.spreadsheets.values.append({
      spreadsheetId: spreadsheetId,
      range: 'Accounts!A:A', // Append after the last row of the Accounts sheet
      valueInputOption: 'RAW',
      requestBody: {
        values: values
      }
    });
    console.log(`${response.data.updates.updatedCells} cells appended.`);
  } catch (err) {
    console.error('The API returned an error:', err);
    throw err;
  }
}

// Example data to write to the Google Sheet
const data = {
  "accountID": "1006",
  "accountClass": "NA",
  "accountType": "NoType",
  "customproperty1": "McNeil",
  "displayname": "N",
  "name": "MNeil"
};

// Call the function; the error is already logged above, so only mark the run as failed
writeDataToSheet(data).catch(() => {
  process.exitCode = 1;
});

Explanation: The code uses the Google Sheets API to append data to a specific spreadsheet. Here's a summary:

- Setup:

  - Imports the googleapis library and Node's fs module (fs is not used in this snippet; the credentials are loaded with require).

  - Loads credentials for authentication from a JSON file (external-connector-framework-09842e2d8788.json).

  - Creates a JWT client using the loaded credentials to authorize API calls.

  - Initializes a Google Sheets API v4 client instance.

- Configuration:

  - Defines the ID of the target Google Sheet (spreadsheetId).

- Data Writing Function (writeDataToSheet):

  - Takes a data object as input.

  - Converts the data object's values into a two-dimensional array (values) that holds a single row.

  - Uses the Google Sheets API (sheets.spreadsheets.values.append) to append the data to the specified spreadsheet:

    - spreadsheetId: ID of the target spreadsheet.

    - range: Target range for writing data (Accounts!A:A in this case, that is, column A of the Accounts sheet). The API looks for existing data in this range and appends the new row after it.

    - valueInputOption: Sets how the provided data is interpreted (RAW in this case, so values are stored as given without parsing).

    - requestBody: Contains the data to be written (values array).

  - Logs the number of cells successfully updated to the console.

  - Includes error handling that logs API errors to the console and rethrows them.

- Example Usage:

  - Defines a sample data object with various account properties. This would be the task data that you receive from the API body.

  - Calls the writeDataToSheet function with the sample data object.

This code demonstrates how to connect to Google Sheets via the API, authenticate, and write data to a specific sheet in a specific format. You can modify the data object and target range (Accounts!A:A) to suit your needs.
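Because Object.values(data) writes values in the object's key order, a request body whose fields arrive in a different order would land in the wrong columns. One way to guard against that is sketched below. The column list ACCOUNT_COLUMNS and the helper toAccountRow are hypothetical. They assume the Accounts sheet's columns follow the order of the sample data object and that the account fields sit at the top level of the request body, so check both against your sheet and the ECF specification.

```javascript
// Assumed column order of the Accounts sheet (taken from the sample data object).
const ACCOUNT_COLUMNS = [
  'accountID',
  'accountClass',
  'accountType',
  'customproperty1',
  'displayname',
  'name'
];

// Hypothetical helper: build one sheet row in a fixed column order,
// regardless of the key order in the incoming body.
function toAccountRow(body, columns = ACCOUNT_COLUMNS) {
  if (!body || !body.accountID) {
    // Treating accountID as mandatory is an assumption of this sketch.
    throw new Error('accountID is required');
  }
  return columns.map((column) => {
    const value = body[column];
    // Missing fields become empty cells so later columns stay aligned.
    return value === undefined || value === null ? '' : String(value);
  });
}
```

With this helper, the values array passed to sheets.spreadsheets.values.append could be built as [toAccountRow(requestBody)] instead of [Object.values(data)].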

Access Provisioning (Add a role to an account)

The following sample code grants a role to an account. It uses hardcoded input data for demonstration purposes. Typically, your custom connector receives this data from EIC when an 'add access' task for a role is provisioned. In practice, you would extract this data from the request body and process it from there.

const { google } = require('googleapis');
const fs = require('fs');

// Load service account credentials from the JSON key file
const credentials = require('./utils/external-connector-framework-09842e2d8788.json');

// Create a new JWT client using the credentials
const auth = new google.auth.JWT(
  credentials.client_email,
  null,
  credentials.private_key,
  ['https://www.googleapis.com/auth/spreadsheets']
);

// Create a new instance of Google Sheets API
const sheets = google.sheets({ version: 'v4', auth });

// ID of the Google Sheet
const spreadsheetId = '1tZyExhNelfLaI68oBI3DwWly2p1xfY7VJ1k_CaOzoP0';

// Function to append one account-role membership to the Roles_Memberships sheet
async function writeDataToSheet(data) {
  try {
    // One row; the cells follow the property order of the data object
    const values = [Object.values(data)];
    const response = await sheets.spreadsheets.values.append({
      spreadsheetId: spreadsheetId,
      range: 'Roles_Memberships!A:A', // Append after the last row of the Roles_Memberships sheet
      valueInputOption: 'RAW',
      requestBody: {
        values: values
      }
    });
    console.log(`${response.data.updates.updatedCells} cells appended.`);
  } catch (err) {
    console.error('The API returned an error:', err);
    throw err;
  }
}

// Example data to write to the Google Sheet
const data = {
  "accountID": "15553759289495",
  "entitlementID": "15553692240530"
};

// Call the function; the error is already logged above, so only mark the run as failed
writeDataToSheet(data).catch(() => {
  process.exitCode = 1;
});

Explanation: In this example, the code provisions account-role memberships (access) by appending a row to a Google Sheet. The sheet stands in for a target application. For real-world use, replace the Google Sheets calls with calls to your system's actual access control API.

The code defines a function writeDataToSheet that takes an object with accountID and entitlementID (role ID) and appends them as a row to the Roles_Memberships sheet. Replace this function with your system's access provisioning logic (calling an API or updating a database).
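To move from the hardcoded sample to real task data, it can help to separate validation of the request from the storage call. The sketch below is an illustration under assumptions, not part of the ECF specification. The names addAccess and grantRole and the returned status object are hypothetical, and the body is assumed to carry accountID and entitlementID as in the sample data object. In the sheet-based example, grantRole could wrap writeDataToSheet. In a real connector it would call your target application's access API.

```javascript
// Hypothetical handler: validate the task data, then delegate to a storage callback.
async function addAccess(body, grantRole) {
  const { accountID, entitlementID } = body || {};
  if (!accountID || !entitlementID) {
    return { status: 'failed', message: 'accountID and entitlementID are required' };
  }
  try {
    // grantRole is supplied by the caller, for example (m) => writeDataToSheet(m).
    await grantRole({
      accountID: String(accountID),
      entitlementID: String(entitlementID)
    });
    return { status: 'success' };
  } catch (err) {
    // Report the failure to the caller instead of crashing the server.
    return { status: 'failed', message: err.message };
  }
}
```

Passing the storage function as a parameter keeps the validation logic testable without a Google Sheets connection.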

Sample Node.js server application code (Integrates Google Sheets)

The sections above detail individual operations. For a comprehensive example that incorporates all of these functions, refer to the sample project available at this link.